Mark your calendars!

The 7th Edition is scheduled for November 17th and 18th, 2026 in Austin

Free Virtual Summit | October 6-7, 2025

Ticketed In-Person Summit | October 8-9, 2025 | Renaissance Austin Hotel

6th Annual MLOps World | GenAI Summit 2025

The event that takes AI/ML & agentic systems from concept to large-scale production

2 Days • 16 Tracks • 75 Sessions • Vibrant Expo

Why attend: Optimize & Accelerate

Build optimal strategies

Learn emerging techniques and approaches shared by leading teams who are actively scaling ML, GenAI, and agents in production.

Increase project efficiency

Minimize project risks, delays, and missteps by learning from case studies that set new standards for impact, quality, and innovation. Explore tools for agent-driven apps, multi-agent systems, and AI-assisted development.

Make better decisions

Make better, faster decisions with lessons and pro tips from the teams shaping ML, GenAI, and agentic AI systems in production.

Why attend

Technical Workshops

- Workshop: Context Engineering – Practical Techniques

- Workshop: Building AI Agents from Scratch

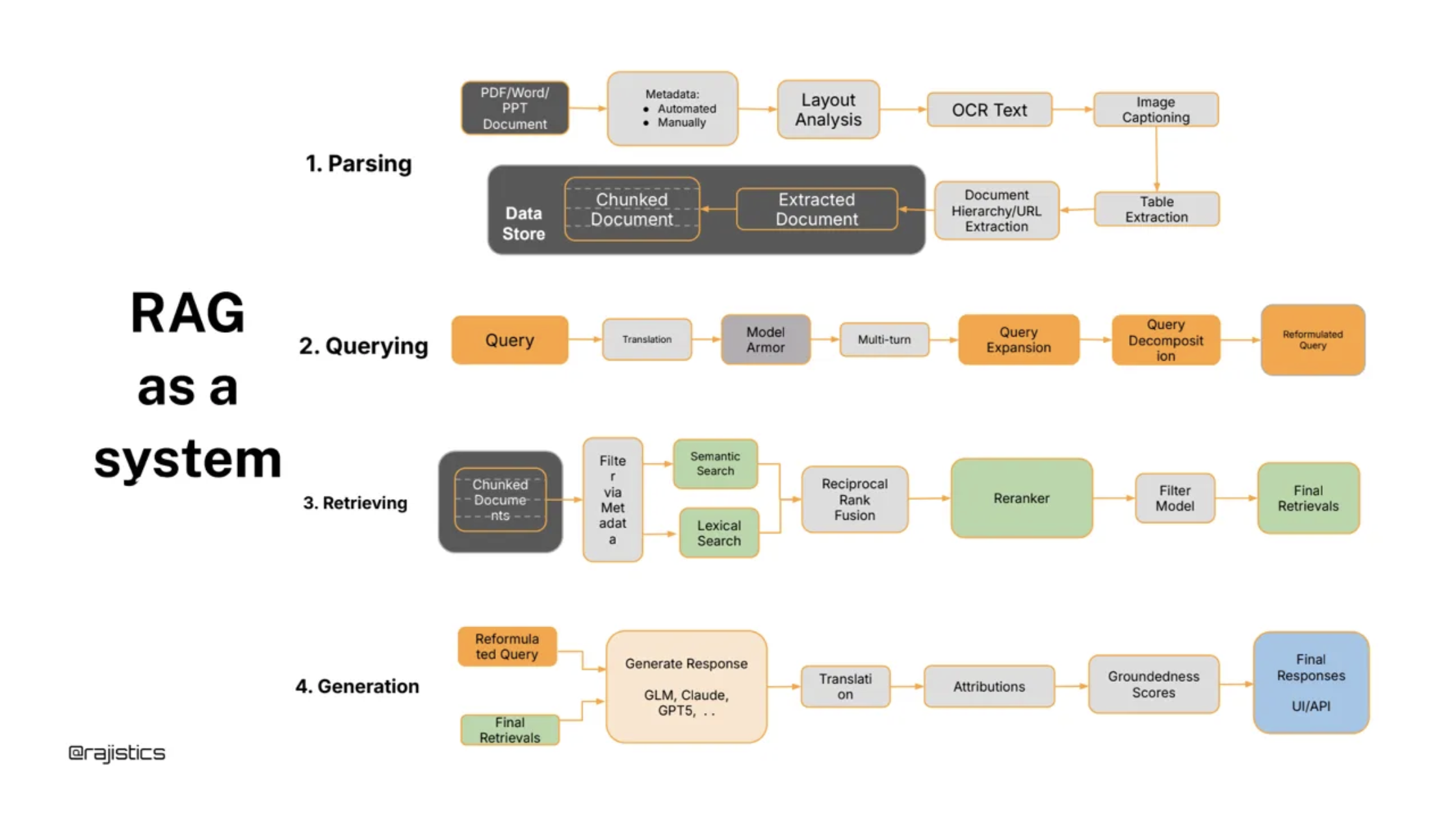

- Workshop: Managing RAG

- Workshop: Vibe-Coding Your First LLM App

- Workshop: Conversational Agents with Thread-Level Metrics

Industry Case Studies & Roundtable Discussions

- Case study + Discussion Groups on

- SLMs + Fine-Tuning: with Arcee

- Sustainable GenAI Systems at Target

- Explainable AI at Fujitsu

- Agentic Workforces at Marriott International

- Agent-Powered Code Migration at Realtor.com

and more!

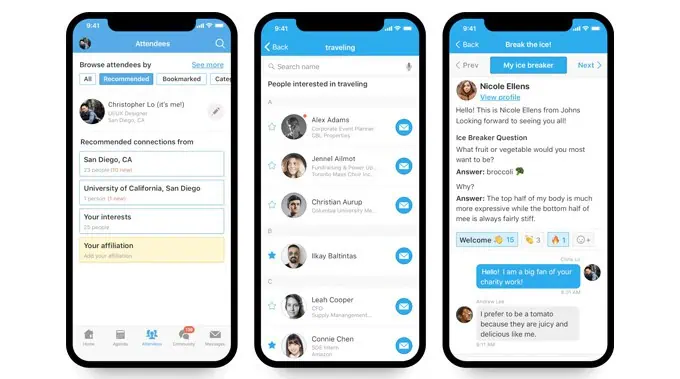

Community Event App

Meet up with 800+ attendees and build your network onsite. See all attendees and connect with speakers. Share your story and build your community.

2 Days of workshops, Case studies, Discussions & Socials

Learn from leading minds, sharpen your skills, and connect with innovators driving safe and effective AI in the real world.

Free Online Stage

- Skills Workshops (Oct 6)

- Expert Sessions (Oct 7)

Day 1

- Summit:

- Talks, Panels, & Workshops

- Expo:

- Lightning Talks

- Brain Dates

- Community Square

- Startup Zone

- Vendor Booths

- Opening Party

Day 2

- Keynote

- Summit:

- Talks, Panels, & Workshops

- Expo:

- Lightning Talks

- Brain Dates

- Community Square

- Startup Zone

Why attend: Connect & Grow

Grow industry influence

Equip your team to win

Stay ahead of fast-moving competitors by giving your team the insights, skills, and contacts they need to exceed expectations.

Build career momentum

Make every hour count by using our event app to hyper-focus on the right topics and people who will help shape your future in AI.

2025 Summit: Full-Spectrum AI

All themes, talks, and workshops curated by top AI practitioners to deliver real-world value. Explore sessions

2025 THEME: AI Agents & Agentic Workforces

AI Agents for Developer Productivity

This track highlights practical uses of agents to streamline dev workflows—from debugging and code generation to test automation and CI/CD integration.

AI Agents for Model Validation and Deployments

Agents can now assist in model testing, monitoring, and rollback decisions. The track focuses on how teams are using autonomous systems to harden their ML deployment workflows.

Augmenting Agentic Workforces

This track explores how teams are combining human oversight with semi-autonomous agents to scale support, operations, and decision-making across the business.

Agents in Production

Latest Trends in MLOps

2025 THEME: MLOps & Organizational Scale

Governance, Auditability & Model Risk Management

This track covers how teams manage AI risk in production—through model governance, audit trails, compliance workflows, and strategies for monitoring model behavior over time.

MLOps for Smaller Teams

Not every team has a platform squad or unlimited infra budget. This track shares practical approaches to shipping ML with lean teams—covering lightweight tooling, automation shortcuts, and lessons from teams doing more with less.

ML Lifecycle Security

ML Training Lifecycle

Scoping and Delivering Complex AI Projects

2025 THEME: LLM Infrastructure & Operations

LLMs on Kubernetes

This track covers the key architectural choices and infra strategies behind scaling AI and LLM systems in production—from bare metal to Kubernetes, GPU scheduling to inference optimization. Learn what it really takes to build and operate reliable GenAI and agent platforms at scale.

ML Deployments on Prem

LLM Observability

Data Engineering in an LLM Era

Inference Optimization & Scaling

Multimodal Systems in Production

Our Expo is where innovation, ideas, and connections come to life

Transform from attendee to active participant by leveling up your professional contacts, exchanging ideas, and even grabbing the mic to share a passion project.

Make New Connections

Connect with AI Practitioners

Brain Dates

Speakers' Corner

Vendor Booths

Community Square

Startup Zone

Hands-on Sessions

Austin Parties

40+ Technical Workshops and Industry Case Studies

Past Event Speakers

Meet the experts bringing techniques, best practices, and strategies to this year’s stage.

Irena Grabovitch-Zuyev

Staff Applied Scientist, PagerDuty

Testing AI Agents: A Practical Framework for Reliability and Performance

Eric Riddoch

Director of ML Platform, Pattern AI

Insights and Epic Fails from 5 Years of Building ML Platforms

Linus Lee

EIR & Advisor, AI, Thrive Capital

Agents as Ordinary Software: Principled Engineering for Scale

Niels Bantilan

Chief ML Engineer, Union.ai

A Practical Field Guide to Optimizing the Cost, Speed, and Accuracy of LLMs for Domain-Specific Agents

Tony Kipkemboi

Head of Developer Relations, CrewAI

Building Conversational AI Agents with Thread-Level Eval Metrics

Denise Kutnick

Co-Founder & CEO, Variata

Opening Pandora’s Box: Building Effective Multimodal Feedback Loops

Zachary Carrico

Senior Machine Learning Engineer, Apella

A Practical Guide to Fine-Tuning and Deploying Vision Models

Paul Yang

Member of Technical Staff, Runhouse

Why is ML on Kubernetes Hard? Defining How ML and Software Diverge

Freddy Boulton

Open Source Software Engineer, Hugging Face

Gradio: The Web Framework for Humans and Machines

Aleksandr Shirokov

Team Lead MLOps Engineer, Wildberries

LLM Inference: A Comparative Guide to Modern Open-Source Runtimes

Federico Bianchi

Senior ML Scientist, TogetherAI

From Zero to One: Building AI Agents From The Ground Up

Kshetrajna Raghavan

Principal Machine Learning Engineer, Shopify

Where Experts Can't Scale: Orchestrating AI Agents to Structure the World's Product Knowledge

Calvin Smith

Senior Researcher Agent R&D, OpenHands

Code-Guided Agents for Legacy System Modernization

Latest News

Why attend

Event Parties & Networking

Explore Frontier Tools & Startups

Give your team an edge with insights, skills, and connections from the industry’s top innovators. Click here to see the exhibiting sponsors.

Grow industry influence

Join Brain Dates, Speakers’ Corner, Community Square, or deliver a talk to share your expertise and amplify your industry impact.

Curated by AI Practitioners

All sessions and workshops have been hand-picked by a Steering Committee of fellow AI practitioners who obsess about delivering real-world value for attendees.

Denys Linkov

Event Co-Chair & Head of ML at WiseDocs

“We built this year’s summit around practical takeaways. Not theory but actual workflows, strategies, and the next three steps for your team. We didn’t want another ‘Intro to RAG’ talk. We wanted the things people are debugging, scaling, and fixing right now.”

Volunteering

Apply for the opportunity to get exclusive behind-the-scenes access to the MLOps World experience while growing your network and skills in real-world artificial intelligence.

Austin

Renaissance Austin Hotel

Once again our venue is the beautiful Renaissance Austin Hotel which delivers an exceptional 360 experience for attendees, complete with restaurants, rooftop bar, swimming pool, spa, exercise facilities, and nearby nature walks. Rooms fill up fast, so use our code (MLOPS25) for discounted rates.

Choose Your Email Adventure

Join our Monthly Newsletter to be first to get expert videos from our flagship events and community offers including the latest Stack Drops.

Join Summit Updates to learn about event-specific news like ticket promos and agenda updates, as well as invites to join our free online Stack Sessions.

Choose what works best for you and update your email preferences at any time.

Hear From Past Attendees

Data and AI Scientist, Consultant, Podcaster

Free Virtual October 6-7 | In-person October 8-9

What Your Ticket Includes

- Full access to Summit sessions – Day 1 (Oct 8) & Day 2 (Oct 9) in Austin

- Bonus virtual program – live talks and workshops on Oct 6 & 7

- Hands-on learning – in-person talks, virtual workshops, and skill-building sessions

- Food & networking – connect with peers over meals, socials, and receptions

- AI-powered event app – desktop & mobile access for networking and schedules

- Networking events – structured meetups and community mixers

- On-demand replays – access to all post-summit videos

- 30 days of O’Reilly online learning – unlimited access to books, courses, and videos from O’Reilly and 200+ publishers

Past Agenda

This agenda is still subject to changes.

Join free virtual sessions October 6–7, then meet us in Austin for in-person case studies, workshops, and expo October 8–9

FAQ

When and where is the event?

The in-person portion of MLOps World | GenAI Summit takes place October 8-9, 2025 at the Renaissance Austin Hotel.

Address: 9721 Arboretum Blvd, Austin, TX 78759, United States. See booking details.

What’s included with my ticket?

- Live training courses

- In-depth learning paths

- Interactive coding environments

- Certification prep materials

- Most major AI publications

Is there a virtual option?

What types of sessions can I expect?

Are there more active types of experiences?

How do I register?

Can I cancel or transfer my ticket?

Are discounts available for group ticket purchases?

Who typically attends MLOps World?

Will slides or recordings be available after the event?

Yes. The majority of presenters grant permission for their sessions to be recorded and shared. These recordings are made available after the event. The best way to be notified when new learning resources are released is by subscribing to our newsletter.

What if I have dietary or accessibility needs?

How do I apply to speak?

Submit your proposal via the Call for Speakers link in our site header (available ahead of each event) or subscribe to our newsletter for MLOps and other speaking alerts. Learn more

What kinds of talks are accepted?

Are speaker slots paid or unpaid?

What’s the speaker deadline for slides or submissions?

Do speakers get free tickets or travel support?

Can I speak virtually or only in-person?

Are sessions recorded? Will they be shared publicly?

What kind of A/V support or setup is provided?

What are the sponsorship packages and benefits?

How do I become a sponsor?

Visit our sponsor page to get more details and download our Sponsorship Guide, or contact Faraz Thambi at [email protected] to discuss availability and options.

What is the audience profile?

Attendees include ML/Data Engineers, Developers, Solution Architects / Principal Engineers, ML/AI Infra Leads, Technical Leaders, and Senior Leadership (Director, VP, C-suite, Founder) decision-makers from startups, scaleups, and enterprises across North America and around the globe.

Can I sponsor the virtual component or a specific track only?

How is lead capture handled?

Will there be booth space? How big? What’s included?

Yes. Booth packages vary in size depending on the tier; they range from a 20’ x 20’ island booth (Platinum) to a 6’ x 10’ draped booth (Bronze). Please see the guide for full specifications.

Can we run our own bespoke event or session?

Yes. We offer limited opportunities for sponsor-hosted workshops, roundtables, and after-hours events, pending approval and availability.

Are there other ways to get involved as a sponsor?

Yes, leading companies can apply to contribute discounts and free trials to our audience of AI/ML practitioners as part of our Stack Drop and Community Code programs. Learn more from our blog or email [email protected]